AI and Science

See Also

News

DeepMind and BioNTech build AI lab assistants for scientific research: Financial Times, Oct 2024, reporting on a recent Nobel Foundation event. It’s not about replacing scientists (the current systems are too inaccurate), but in the right hands it’s a tremendous accelerator.

Microsoft’s AI4Science Research arm has also been harnessing LLMs to speed up scientific discovery. Its director Chris Bishop said at a research forum this year that one of the remarkable properties of LLMs was that “they can function as effective reasoning engines”, which is particularly useful in science.

OpenAI partnered with Los Alamos: trying to get GPT-4o to handle lab tasks that involve voice and manipulation.

For example, it may be easy to know one must conduct mass spectrometry or even detail the steps in writing; it is much harder to perform correctly, with real samples.

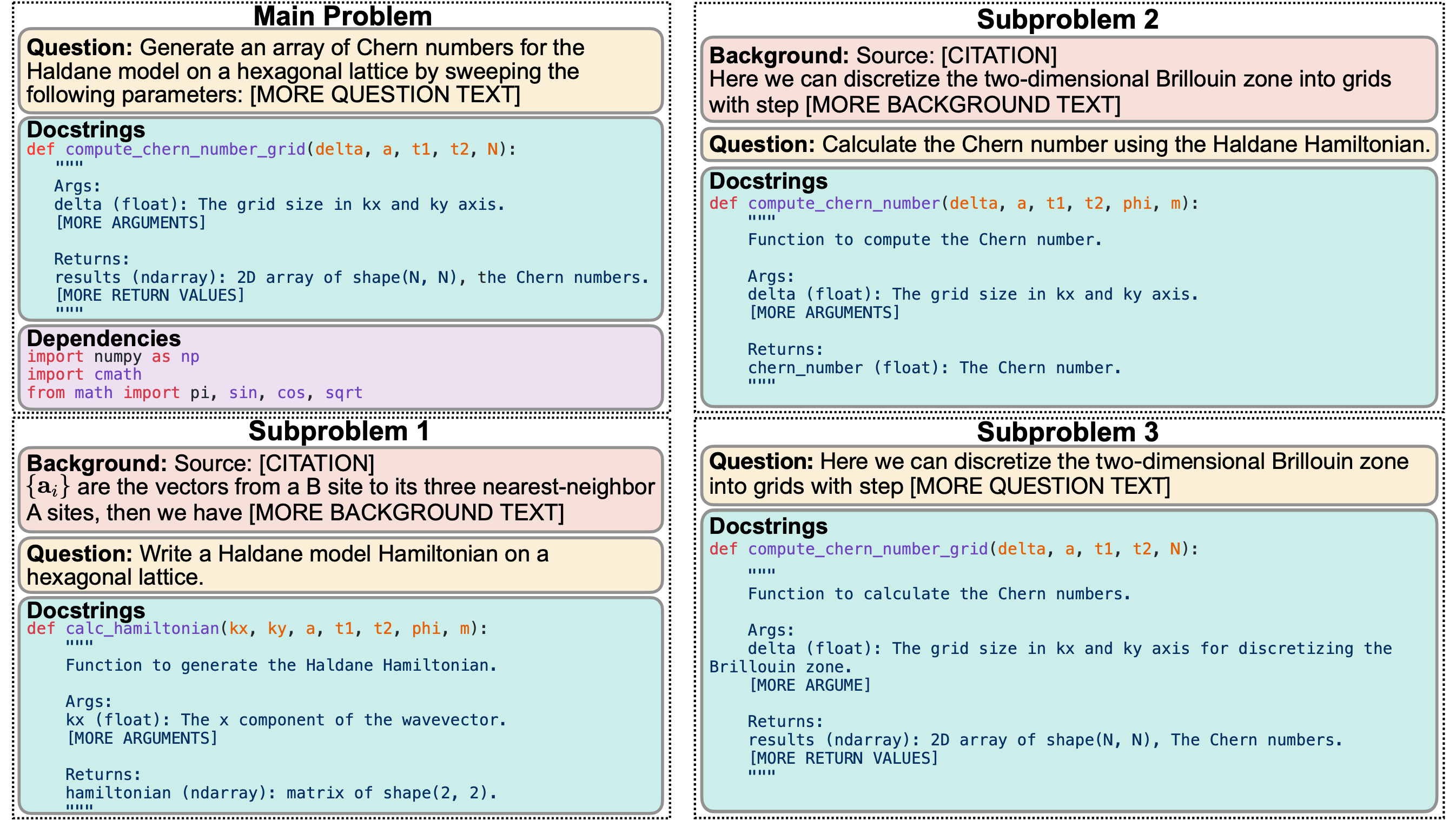

SciCode: A Research Coding Benchmark Curated by Scientists

Dan Lemire says

You would think that these technological advances should accelerate progress. But, as argued by Patrick Collison and Michael Nielsen, science productivity has been falling despite all our technological progress. Physics is not advancing faster today than it did in the first half of the XXth century. It may even be stagnant in relative terms. I do not think that we should hastily conclude that ChatGPT will somehow accelerate the rate of progress in Physics. As Clusmann et al. point out: it may simply ease scientific misconduct. We could soon be drowning in a sea of automatically generated documents. Messeri and Crockett put it elegantly:

AI tools in science risks introducing a phase of scientific enquiry in which we produce more but understand less

BOINC from UC Berkeley lets you run scientific computing tasks on your computer in the background. It’s similar to projects like SETI@Home, but more general; it isn’t associated with a specific research project.

IBM and NASA built a science-oriented LLM trained on “60 billion tokens on a corpus of astrophysics, planetary science, earth science, heliophysics, and biological and physical sciences data. Unlike a generic tokenizer, the one we developed is capable of recognizing scientific terms such as “axes” and “polycrystalline.” More than half of the 50,000 tokens our models processed were unique compared to the open-source RoBERTa model on Hugging Face.”

Both models are available on Hugging Face: the encoder model can be further finetuned for applications in the space domain, while the retriever model can be used for information retrieval applications for RAG.

Microsoft lists AI in Science as one of its big trends for 2024, including tools for sustainable agriculture, the world’s largest image-based AI model to fight cancer, and using advanced AI to find new drugs for infectious diseases and new molecules for breakthrough medicines.

Implications

An economics grad student studied 1000 scientists at an R&D department in a major science organization and concluded:

- AI increases scientist productivity, including novelty

- AI helps top scientists the most

- Automates the “idea generation phase”

I wonder how much of the improvement is a one-off…fancy new toy and they take advantage of it for a while until their productivity plateaus again

Artificial Intelligence, Scientific Discovery, and Product Innovation by Aidan Toner-Rodgers from MIT (PDF) and see his X summary

AI and the transformation of social science research

Grossmann et al. (2023)

A well-summarized take. See also the extensive references, including an apparently exhaustive list of examples of AI in social sciences research.

LLMs can allow the kinds of simulations that until now were limited to domains that allowed numerical quantification, e.g. particle physics, epidemiology, economics. An LLM’s ability to respond to surveys might also make it a way to simulate research into human behavior.

Important caveat: “Already, LLM engineers have been fine-tuning pretrained models for the world that ‘should be’ (12) rather than the world that is, and such efforts to mitigate biases in AI training (2, 13) may thus undermine the validity of AI-assisted social science research.”

See ABM: Agent-based models are composed of: (1) numerous agents specified at various scales; (2) decision-making heuristics; (3) learning rules or adaptive processes; (4) an interaction topology; and (5) an environment.
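To make the five components concrete, here is a minimal sketch of a toy opinion model. Everything in it (the `Agent` fields, the ring topology, the `tolerance` setting, the openness update) is an illustrative assumption, not something taken from Grossmann et al.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    opinion: float   # position in [0, 1]
    openness: float  # step size toward a neighbour; adapted by the learning rule


def make_agents(n, rng):
    # (1) Agents: here all at one scale, with random starting opinions.
    return [Agent(opinion=rng.random(), openness=0.2) for _ in range(n)]


def ring_neighbours(i, n):
    # (4) Interaction topology: each agent only talks to its two ring neighbours.
    return [(i - 1) % n, (i + 1) % n]


def step(agents, environment, rng):
    n = len(agents)
    for i, agent in enumerate(agents):
        other = agents[rng.choice(ring_neighbours(i, n))]
        gap = other.opinion - agent.opinion
        # (2) Decision heuristic: listen only to neighbours within tolerance.
        if abs(gap) <= environment["tolerance"]:
            agent.opinion += agent.openness * gap
            # (3) Learning rule: agreeable encounters make the agent more open.
            agent.openness = min(0.5, agent.openness * 1.05)
        else:
            agent.openness = max(0.01, agent.openness * 0.95)


def spread(agents):
    # Range of opinions across the population.
    opinions = [a.opinion for a in agents]
    return max(opinions) - min(opinions)


def run(n_agents=50, n_steps=200, tolerance=0.3, seed=0):
    rng = random.Random(seed)
    # (5) Environment: shared settings that every agent experiences.
    environment = {"tolerance": tolerance}
    agents = make_agents(n_agents, rng)
    for _ in range(n_steps):
        step(agents, environment, rng)
    return agents
```

Calling `spread(run())` gives a rough measure of how far opinions have converged. In an LLM-based variant, the decision heuristic in `step` is presumably the part a model call would replace.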

via Penn Today

Discovery for science and beyond

Sakana AI The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery

https://arxiv.org/abs/2408.06292

Lu et al. (2024)

But of course The Zvi says it’s super-dangerous.

Tips

Nature Aug 2024 offers science-writing tips, plus this prompt for writing abstracts.

You are a professional copy editor with ample experience handling scientific texts. Revise the following abstract from a manuscript so that it follows a context–content–conclusion scheme. (1) The context portion communicates to the reader the gap that the paper will fill. The first sentence orients the reader by introducing the broader field. Then, the context is narrowed until it lands on the open question that the research answers. A successful context section distinguishes the research’s contributions from the current state of the art, communicating what is missing in the literature (that is, the specific gap) and why that matters (that is, the connection between the specific gap and the broader context). (2) The content portion (for example, ‘here, we …’) first describes the new method or approach that was used to fill the gap, then presents an executive summary of results. (3) The conclusion portion interprets the results to answer the question that was posed at the end of the context portion. There might be a second part to the conclusion portion that highlights how this conclusion moves the broader field forward (for example, ‘broader significance’).

Software

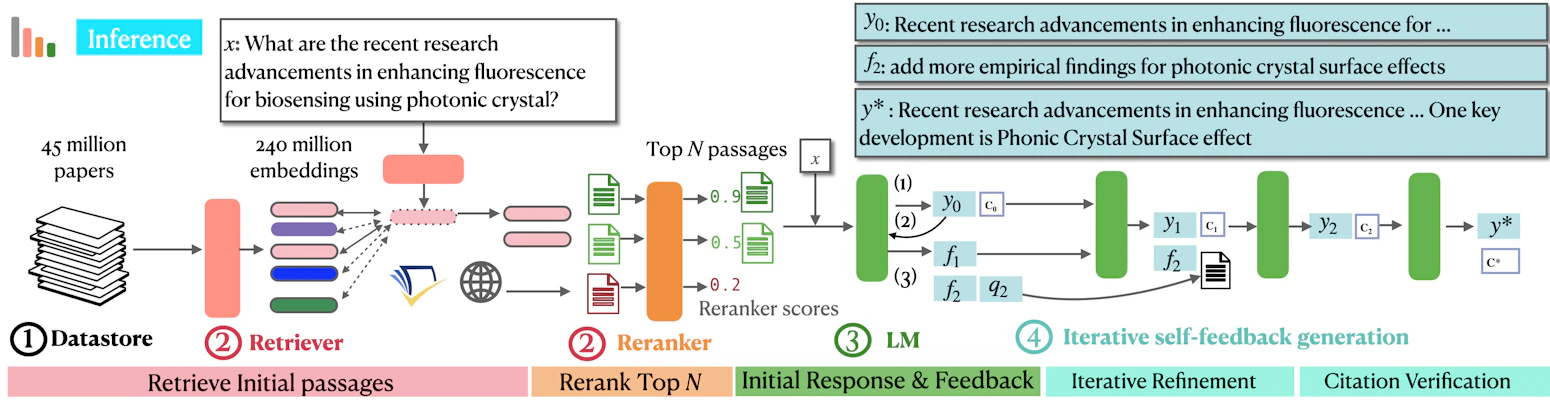

Ai2 OpenScholar: Scientific literature synthesis with retrieval-augmented language models. The Allen Institute for AI (Ai2) built OpenScholar, “a retrieval-augmented language model (LM) designed to answer user queries by first searching for relevant papers in the literature and then generating responses grounded in those sources.”

OpenAlex is a search engine like Google Scholar, but with a free API.

We index over 250M scholarly works from 250k sources, with extra coverage of humanities, non-English languages, and the Global South.

We link these works to 90M disambiguated authors and 100k institutions, as well as enriching them with topic information, SDGs, citation counts, and much more
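A minimal sketch of what querying that API might look like, using only the Python standard library. It assumes the public `https://api.openalex.org/works` endpoint with its `search`, `per-page`, and `mailto` query parameters, and a JSON response whose `results` entries carry `display_name`, `publication_year`, and `cited_by_count`. The helper names are hypothetical, and the current OpenAlex documentation should be checked before relying on any of this.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

OPENALEX_WORKS = "https://api.openalex.org/works"


def build_search_url(query, per_page=5, mailto=None):
    """Build a full-text search URL for the works endpoint."""
    params = {"search": query, "per-page": per_page}
    if mailto:
        # Optional contact address; assumed here to be how OpenAlex
        # identifies well-behaved clients.
        params["mailto"] = mailto
    return f"{OPENALEX_WORKS}?{urlencode(params)}"


def fetch(url, timeout=30):
    """Fetch and decode a JSON response (requires network access)."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


def summarize(payload):
    """Reduce a works response to (title, year, citation count) tuples."""
    return [
        (w.get("display_name"), w.get("publication_year"), w.get("cited_by_count"))
        for w in payload.get("results", [])
    ]
```

Typical use would be `summarize(fetch(build_search_url("frozen blueberries nutrition")))`. The URL builder is kept separate from the network call so the query can be inspected, or pasted into a browser, before anything is fetched.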

GPT Researcher is an autonomous agent designed for comprehensive online research on a variety of tasks. (More details)

Elicit.org: search scientific papers with AI

Paper-QA is a GitHub repo with examples of how to do scientific research reviews: a “minimal package for doing question and answering from PDFs or text files (which can be raw HTML). It strives to give very good answers, with no hallucinations, by grounding responses with in-text citations.”

Lateral

https://www.lateral.io/

Complete hours of reading in minutes. A web app that helps you read, find, share & organise your research in one place. So you can complete it up to 10x faster.

Zeta Alpha

https://www.zeta-alpha.com/ an Amsterdam-based company that claims “the best Neural Discovery Platform for AI and beyond. Use state-of-the-art Neural Search to improve how you and your team discover, organize and share knowledge.”

see Sara Hamburg tweet.

https://www.scholarcy.com/

Scispace

I uploaded directly from my Zotero Library.

But when I asked it a basic question “What are the nutritional differences between frozen and fresh blueberries”

Worse, when I asked it:

“how does freezing impact the nutritional profile of blueberries”

The top answers related to electric vehicles, and “freezing” a pension plan.

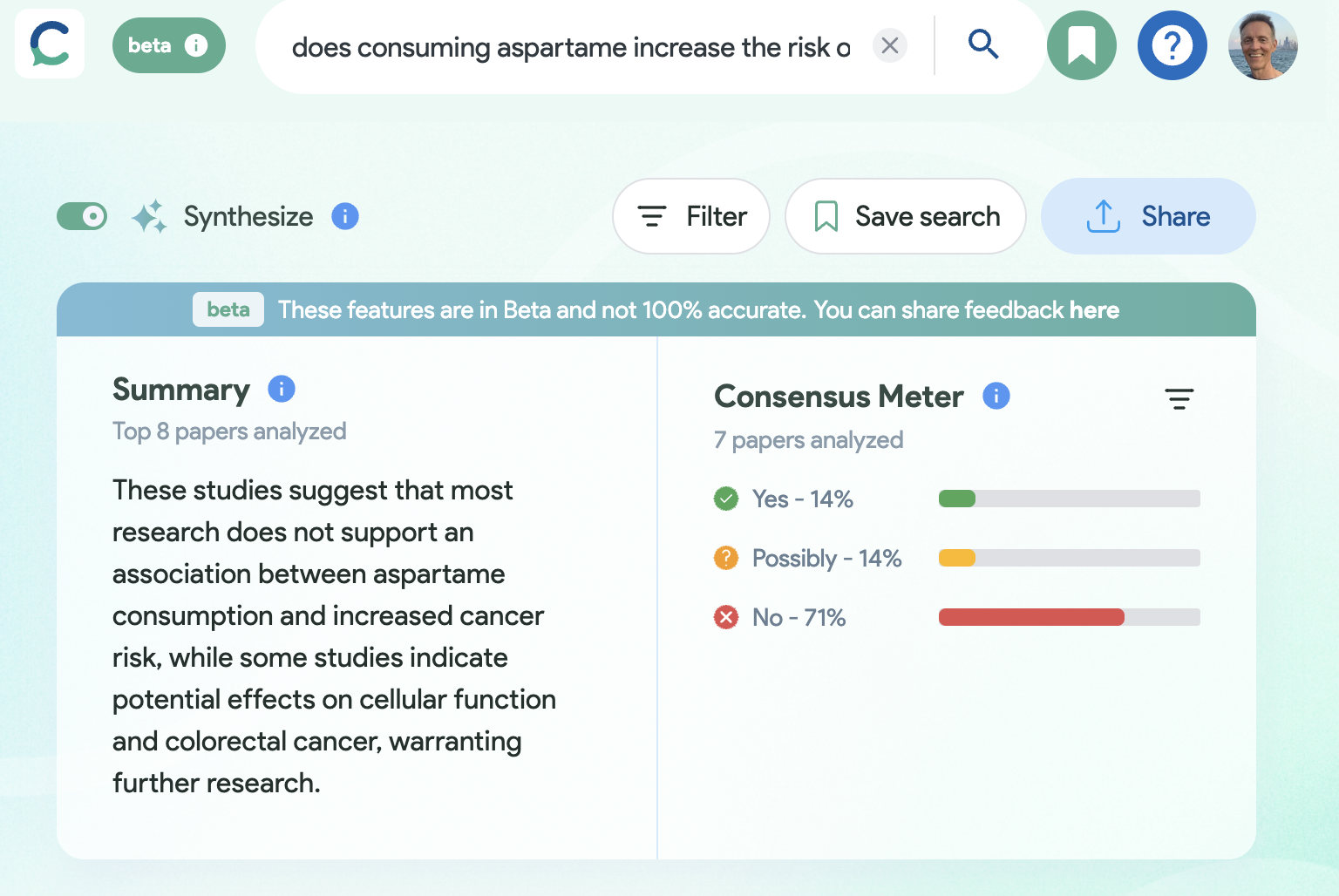

Consensus

Consensus is free for unlimited searches and AI-powered filters, plus 20 credits per month for the more advanced features like GPT-4 summaries and the Consensus Meter. $9 / month for everything, about half that for students.

Try “does consuming aspartame increase the risk of cancer?”

Apply filters, like this one that limits to studies that are validated with RCT:

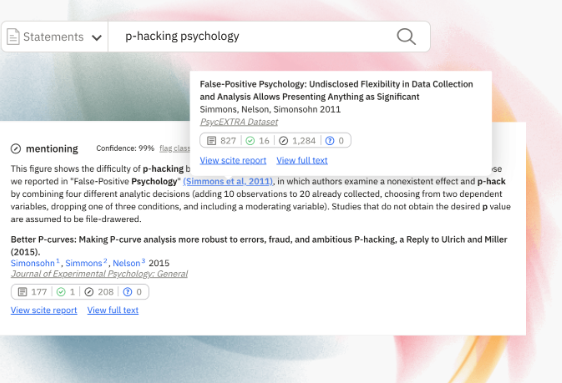

### Scite_

1.2b citation statements extracted and analyzed

181m articles, book chapters, preprints, and datasets

Smart Citations allow users to see how a publication has been cited by providing the context of the citation and a classification describing whether it provides supporting or contrasting evidence for the cited claim

Interview with the developer of Scite_

Google’s FunSearch: Making new discoveries in mathematical sciences using Large Language Models

Romera-Paredes et al. (2024)

FunSearch works by pairing a pre-trained LLM, whose goal is to provide creative solutions in the form of computer code, with an automated “evaluator”, which guards against hallucinations and incorrect ideas. By iterating back-and-forth between these two components, initial solutions “evolve” into new knowledge. The system searches for “functions” written in computer code; hence the name FunSearch.
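The propose-and-evaluate loop described above can be sketched in a few lines. This is a toy under loud assumptions: the LLM is replaced by a hypothetical `propose` stub that randomly nudges the integer constants in a program (and sometimes emits broken code, standing in for a hallucination), the task is just recovering a hidden line, and the loop keeps only the single best program. It is not DeepMind's implementation.

```python
import random
import re

TEST_POINTS = range(-5, 6)


def target(x):
    # Hidden behaviour the search is trying to reproduce (toy assumption).
    return 3 * x + 2


def render(a, b):
    # A candidate "program" is plain Python source defining f(x).
    return f"def f(x):\n    return {a} * x + {b}\n"


def propose(parent_src, rng):
    """Stand-in for the LLM: return a modified program as source text."""
    if rng.random() < 0.1:
        return "def f(x):\n    return x +\n"  # deliberately invalid code
    a, b = (int(n) for n in re.findall(r"-?\d+", parent_src))
    if rng.random() < 0.5:
        a += rng.choice([-1, 1])
    else:
        b += rng.choice([-1, 1])
    return render(a, b)


def evaluate(src):
    """Automated evaluator: run the program and score it; None if it fails."""
    namespace = {}
    try:
        exec(src, namespace)
        f = namespace["f"]
        return -sum((f(x) - target(x)) ** 2 for x in TEST_POINTS)
    except Exception:
        return None  # broken or crashing programs are discarded


def search(seed_src, iterations=300, seed=0):
    rng = random.Random(seed)
    best_src, best_score = seed_src, evaluate(seed_src)
    for _ in range(iterations):
        child = propose(best_src, rng)
        score = evaluate(child)
        # Keep a child only if it runs and strictly improves the score.
        if score is not None and score > best_score:
            best_src, best_score = child, score
    return best_src, best_score
```

Starting from `search(render(0, 0))`, the loop should climb toward a program that matches the target, because the evaluator never lets an invalid or worse program replace the current best. A greedy single-survivor loop like this can stall on harder problems.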

[podcast] Bradley Love on AI and Science

#weather #forecast A neural network-based weather prediction model is almost as good as the supercomputer-running versions, at least for one to ten-day forecasts, but uses a fraction of the code and computing resources: https://www.nature.com/articles/s41586-024-07744-y