Future Predictions

How Good Will It Get

We all agree that this new technology is important and consequential, perhaps even at the once-in-a-generation level. But the real question of interest to investors and decision-makers is how good? If we can understand some of the more realistic scenarios, then it’s possible to make informed strategic or investment bets.

It’s easy to get lost in fantasies about an “AI Takeoff” where all of business and society are permanently transformed, for good or bad. Microsoft and other companies are watching their stock price soar due to their “all-in” bets on the technology. But “bold” or aggressive bets don’t always play out as intended: ask Facebook (er, Meta) about their bets on the metaverse, or any of the thousands of crypto investors still waiting for their predicted transformations.

To understand the implications we need a better understanding of:

- The current state-of-the-art, especially a clearer picture of what’s really new and special.

- Likely performance improvements, in the short and medium term (we’ll go out on a limb and speculate that nobody knows the long term).

- Philosophical underpinnings. Although this might appear too theoretical, when dealing with something that purports to replace something as fundamental as human intelligence, it’s good to consider some of the frameworks people have historically used to discuss what makes humans human.

Sometimes, especially in the short run, it’s useful to understand the conventional wisdom, if only because investors tend to run in herds.

Along the way we’ll also track some of the more speculative ideas.

See Also

The Future of AI

The Future of Content in an AI-generated World

Rodney Brooks AI Scorecard: a far less optimistic view of the future, from a guy who’s been active in the field since the 1980s.

Gary Marcus agrees, at least for self-driving cars.

Uber and Lyft can stop worrying about being disintermediated by machines; they will still need human drivers for quite some time.

Marcus also recommends:

For example, you should really check out Macarthur Award winner Yejin Choi’s recent TED talk. She concludes that we still have a long way to go, saying for example that “So my position is that giving true … common sense to AI, is still moonshot”. I do wish this interview could have at least acknowledged that there is another side to the argument.

His March 2022 Nautilus article says Deep Learning is Hitting a Wall because deep-learning systems fundamentally rely on scaling to solve all their shortcomings. Instead, Marcus thinks we need to go back to symbolic manipulation (neurosymbolic solutions).

Deep-learning systems are outstanding at interpolating between specific examples they have seen before, but frequently stumble when confronted with novelty.

but hybrid approaches might work better. Some of this is inspired by practical work at making existing software work better, such as spell checkers:

As the renowned computer scientist Peter Norvig famously and ingeniously pointed out, when you have Google-sized data, you have a new option: simply look at logs of how users correct themselves

And many of the best models of all use symbolic methods combined with neural networks

Artur Garcez and Luis Lamb wrote a manifesto for hybrid models in 2009, called Neural-Symbolic Cognitive Reasoning. And some of the best-known recent successes in board-game playing (Go, Chess, and so forth, led primarily by work at Alphabet’s DeepMind) are hybrids. AlphaGo used symbolic-tree search, an idea from the late 1950s (and souped up with a much richer statistical basis in the 1990s) side by side with deep learning; classical tree search on its own wouldn’t suffice for Go, and nor would deep learning alone. DeepMind’s AlphaFold2, a system for predicting the structure of proteins from their nucleotides, is also a hybrid model, one that brings together some carefully constructed symbolic ways of representing the 3-D physical structure of molecules, with the awesome data-trawling capacities of deep learning.

For more see What AI Can’t Do

Ed Zitron thinks we’ve reached Peak AI because all the hype over AI in the past year has not resulted in meaningful revenue or even replacement of workers.

Despite fears to the contrary, AI does not appear to be replacing a large number of workers, and when it has, the results have been pretty terrible. A study from Boston Consulting Group found that consultants that “solved business problems with OpenAI’s GPT-4” performed 23% worse than those who didn’t use it, even when the consultant was warned about the limitations of generative AI and the risk of hallucinations.

That fuss about Klarna replacing its customer service with AI? Note that the company itself says that AI is driving $40M of profit improvement (as opposed to, say, actually bringing in $40M).

A Sept 2023 Boston Consulting Group study on 750 of its consultants concluded that AI brings improved performance on tasks involving creative ideation, but that on “business problem solving” it actually underperformed by almost 25%.

MIT economist Daron Acemoglu says Don’t Believe the AI Hype.¹ He estimates the share of tasks affected by AI and related technologies:

Using numbers from recent studies, I estimate this to be around 4.6%, implying that AI will increase TFP by only 0.66% over ten years, or by 0.06% annually. Of course, since AI will also drive an investment boom, the increase in GDP growth could be a little larger, perhaps in the 1-1.5% range.

Taken together, this research suggests that currently available generative-AI tools yield average labor-cost savings of 27% and overall cost savings of 14.4%.

Thus, I estimate that about one-quarter of the 4.6% tasks are of the “harder-to-learn” category and will have lower productivity gains. Once this adjustment is made, the 0.66% TFP growth figure declines to about 0.53%.
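The figures quoted above appear to combine as a simple product: the share of tasks affected times the average cost savings on those tasks. The sketch below reproduces that arithmetic. The variable names are illustrative, and the `hard_relative_gain` value of 0.2 is an assumption chosen only because it reproduces the quoted 0.53% figure; it is not taken from the article.

```python
# Figures quoted above; names are illustrative, not Acemoglu's notation.
task_share = 0.046     # share of tasks affected by AI (4.6%)
cost_savings = 0.144   # average overall cost savings on those tasks (14.4%)

# Ten-year TFP gain = share of affected tasks x average cost savings.
tfp_gain_10y = task_share * cost_savings

# Compound annual rate equivalent to the ten-year gain.
tfp_gain_annual = (1 + tfp_gain_10y) ** (1 / 10) - 1


def adjusted_gain(base_gain, hard_share, hard_relative_gain):
    """Scale the gain when `hard_share` of the tasks yield only
    `hard_relative_gain` times the savings of the remaining tasks."""
    return base_gain * ((1 - hard_share) + hard_share * hard_relative_gain)


# ASSUMPTION: 0.2 is back-solved to match the quoted 0.53%, not sourced.
tfp_gain_adjusted = adjusted_gain(
    tfp_gain_10y, hard_share=0.25, hard_relative_gain=0.2
)

print(f"10-year TFP gain:        {tfp_gain_10y:.2%}")
print(f"annualized:              {tfp_gain_annual:.3%}")
print(f"adjusted for hard tasks: {tfp_gain_adjusted:.2%}")
```

Small changes to either input move the headline number proportionally, which is why the debate below centers on what the 4.6% task share leaves out.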

A June 25, 2024 Goldman Sachs report, GenAI: Too Much Spend Too Little Benefit, co-authored by respected long-time semiconductor analyst Jim Covello, interviews Acemoglu and offers additional details about why the $1T spend is unlikely to create $1T of benefits. This is not like the smartphone, where (contrary to received wisdom today) insiders were very aware of its transformational effects, or the internet, where small amounts of spending could compete with expensive bricks-and-mortar right away. Despite the hype and money spent, it’s hard to think of any real money-making business generated by AI two years after ChatGPT.

See much more by Ed Zitron at Pop Culture and theZvi counterarguments.

But Maxwell Tabarrok, in Contra Acemoglu on AI: The Simple Macroeconomics of AI Ignores All The Important Stuff, counters that Acemoglu underestimates:

- Gains from “deepening automation”, i.e. raising capital productivity in already-automated tasks (e.g. if transformers enable Google to provide better search results with fewer computing resources).

- Economic gains from new tasks, which he ignores only because these might come with negative side effects (e.g. the way social media increased societal mistrust).

- New scientific breakthroughs, which Acemoglu ignores only because they tend to take ten years or more. But simply improving the efficiency of existing science could itself accelerate breakthroughs.

And long-time industry veteran Glyph offers [A Grand Unified Theory of the AI Hype Cycle](https://blog.glyph.im/2024/05/grand-unified-ai-hype.html?utm_source=tldrai). Step 9 is the key one:

- Competent practitioners — not leaders — who have been successfully using N in research or industry quietly stop calling their tools “AI”, or at least stop emphasizing the “artificial intelligence” aspect of them, and start getting funding under other auspices. Whatever N does that isn’t “thinking” starts getting applied more seriously as its limitations are better understood. Users begin using more specific terms to describe the things they want, rather than calling everything “AI”.

Zvi says:

> Andrew Ng confirms that his disagreements are still primarily capability disagreements, saying AGI is still ‘many decades away, maybe even longer.’ Which is admittedly an update from talk of overpopulation on Mars. Yes, if you believe that anything approaching AGI is definitely decades away you should be completely unworried about AI existential risk until then and want AI to be minimally regulated. Explain your position directly, as he does here, rather than making things up.

Data and LLM Futures

AI and Persuasion

Ethan Mollick points to

In a randomized, controlled, pre-registered study GPT-4 was better able to change people’s minds during a conversational debate than other humans, at least when it is given access to personal information about the person it is debating (people given the same information were not more persuasive). The effects were significant: the AI increased the chance of someone changing their mind by 87% over a human debater. This might be why a second paper found that GPT-4 could accomplish a famously difficult conversational task: arguing with conspiracy theorists. That controlled trial found that a three-round debate, with GPT-4 arguing the other side, robustly lowers conspiracy theory beliefs. Even more surprisingly, the effects persist over time, even for true believers.

Advertising

Internet pontificator and banned-from-Facebook Louis Barclay speculates how AI poses a huge threat to ad-based platforms by slashing how many ads we see. A provocative read without any particular insights.

#advertising

Jobs

#employment #economics

Jun 2024 Andrew McAfee The Robots Won’t Cause Massive Unemployment This Time, Either (h/t Kevin Kelly) mentions how the number of translators is actually increasing, despite AI, and explains this is because new markets open up with cheaper translation, and many of those new markets require human review. See AI Language Translation

March 2024 Noah Smith writes a lengthy and persuasive case for why AI will create plentiful high-paying jobs

If AI/LLM development remains capital intensive, this will tend to increase the power of institutions (corporations, governments) that can afford the capital, and reduce the power of individual researchers and employees. Over time, as the skills to develop and use AI diffuse into the population, this should reduce the salaries and prestige of top researchers.

See more at #podcast Conversations with Tyler: Michael Nielsen

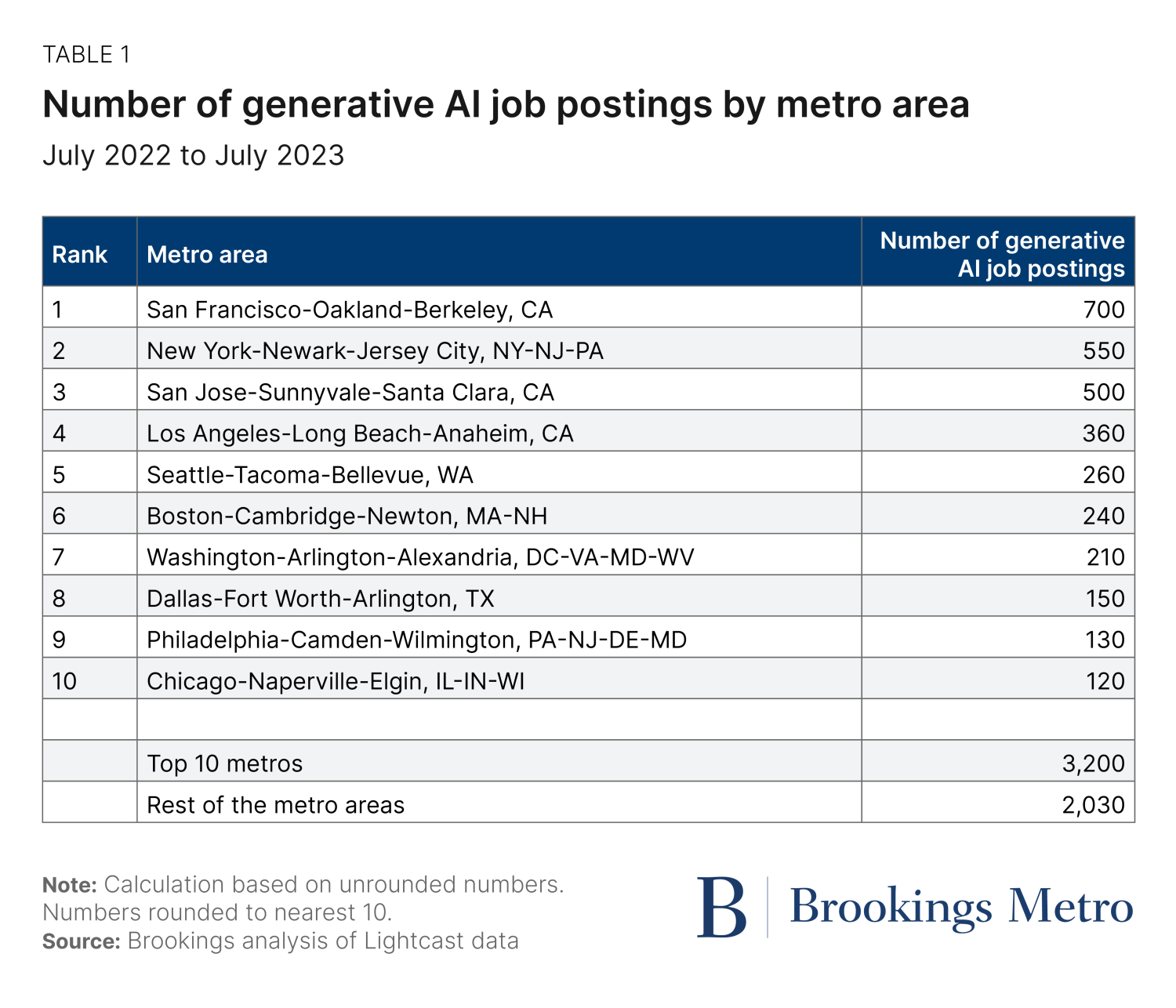

A Brookings Report says AI job postings happen in the same big tech hubs:

FT summarizes a Goldman Sachs report on AI displacing workers

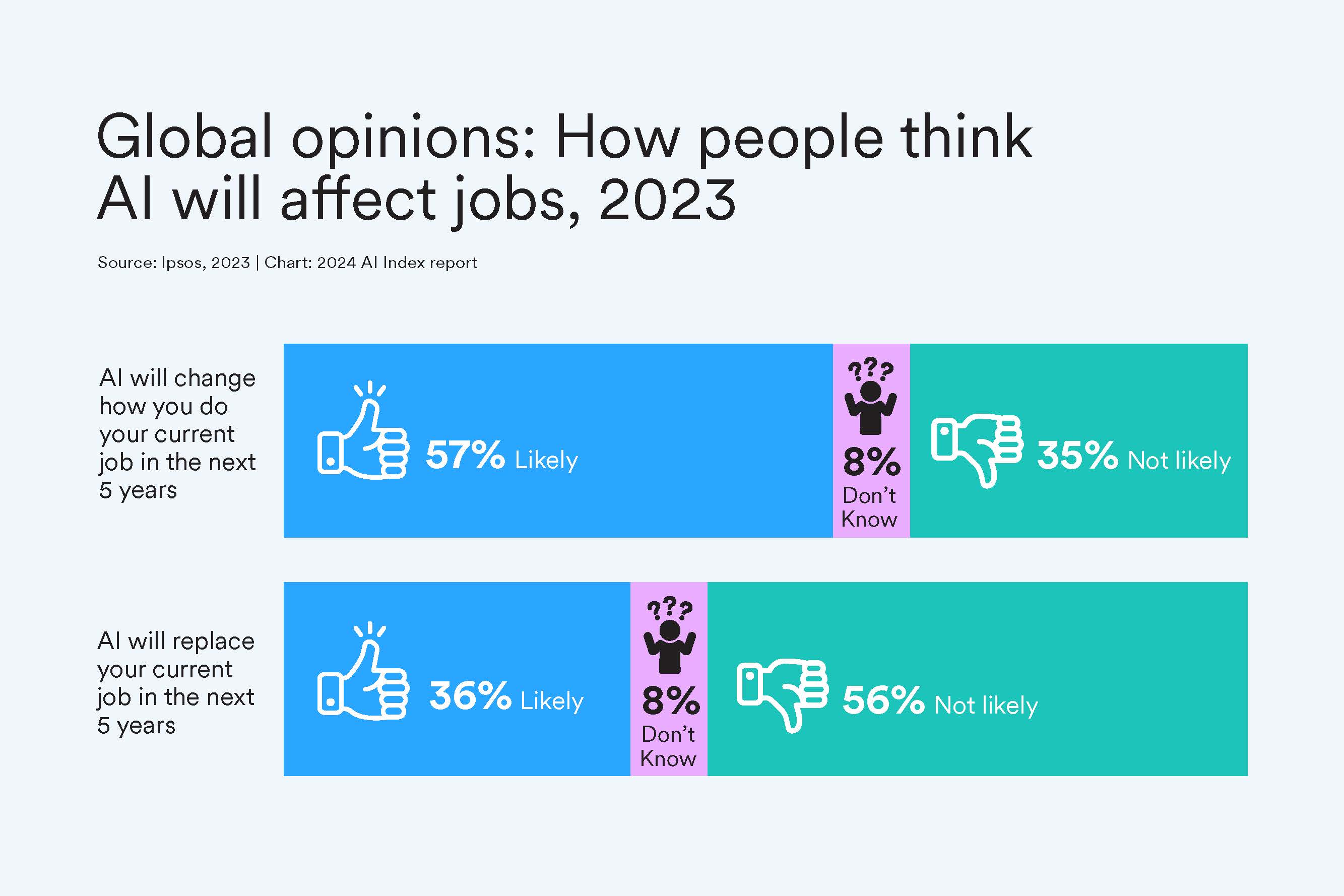

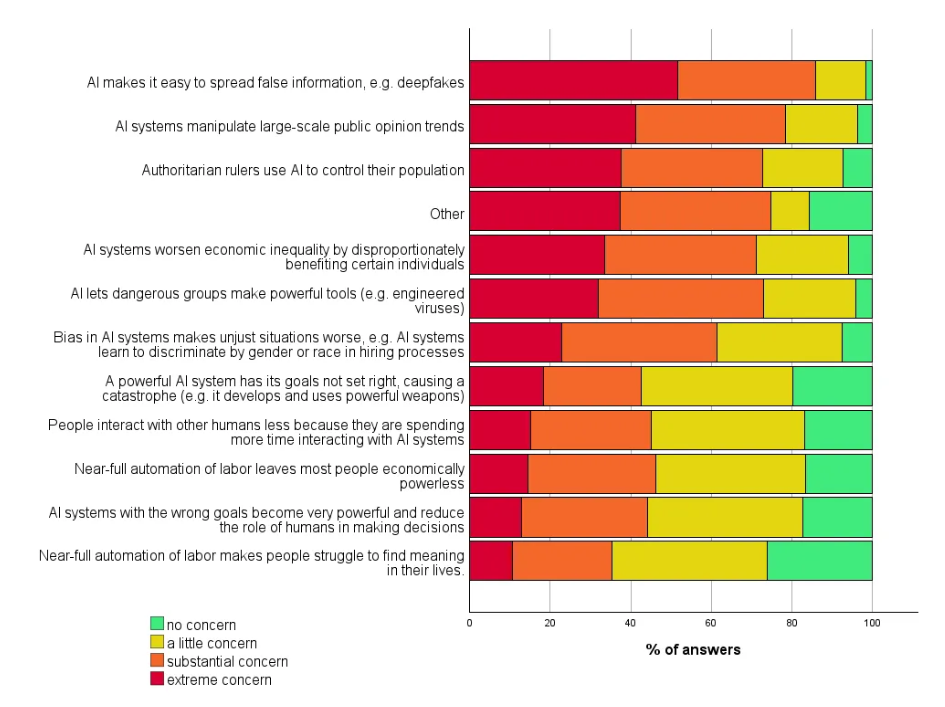

and Stanford’s May 2024 AI Index Report

Tyler Cowen summarizes Scenarios for the transition to AGI including a new NBER working paper by Anton Korinek and Donghyun Suh, plus a recent Noah Smith piece on employment as AI proceeds. And a recent Belle Lin WSJ piece, via Frank Gullo, “Tech Job Seekers Without AI Skills Face a New Reality: Lower Salaries and Fewer Roles.” And here is a proposal for free journalism school for everybody (NYT, okie-dokie!).

Brad DeLong, in DRAFT: Notes: What Is the Techno-Optimist Slant on “AI”?, predicts:

(1) Very large-scale very high-dimension regression and classification analysis is going to be, if we can manage to tame and subdue it, truly game-changing: the transformation from the world of the bureaucracy to the world of the algorithm, with not just Peter Drucker’s mass production, not just Bob Reich’s flexible customization, but rather bespoke creation for nearly everything. This is the heart of modern MAMLM-GPTM-LLM technologies. The use of vast datasets to identify patterns and make predictions will be profound in sectors from finance to healthcare. This is likely to be the biggest effect. It is also very hard to estimate—for if we could estimate how big it will be and what it will be good for, we would have already been able to do much of it.

and “auto-complete on steroids”, i.e. a time-saving and easier way to finish thoughts, and entertainment (electronic pets)

He adds

Apropos of nothing useful, this is yet another reason to dismiss AI-Alignment gurus as people who have truly serious daddy issues, plus—in their belief that we are the crucial generation that is under existential threat and will set the moral compass in stone for all of humanity’s future, because we are on the verge of creating truly superhuman superaccelerating intelligence, and that the key players in doing so are moral philosophers with Silicon Valley connections—a degree of grandiose narcissism that I have not had since the days when I thought I was the only intelligent creature in the universe, and was only beginning to wonder if perhaps there might be another mind inside the giant milk source that appeared to be chirping at me:

and recommends Wilcox NT. AGAINST SIMPLICITY AND COGNITIVE INDIVIDUALISM. Economics and Philosophy. 2008;24(3):523-532. doi:10.1017/S0266267108002137

Expert Forecasts

An October 2023 survey of attendees at AI conferences concludes that progress is happening faster than many expected, but that opinions are highly fragmented.

Scott Alexander summarizes the results of two large surveys of AI experts, one taken in 2016 and another in 2022. Although the first one seemed uncannily accurate on some of its predictions (e.g. the likelihood that AI could write a high school essay in the near future), a closer look at the survey wording reveals that the experts were mostly wrong.

Bounded-Regret is a site that makes calculated forecasts about the future of AI.

For example, the claim that by 2030, LLM speeds will be 5x the words/minute of humans:

benchmarking against the human thinking rate of 380 words per minute (Korba (2016), see also Appendix A). Using OpenAI’s chat completions API, we estimate that gpt-3.5-turbo can generate 1200 words per minute (wpm), while gpt-4 generates 370 wpm, as of early April 2023.
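To put the 5x claim next to the quoted measurements, here is a minimal sketch of the ratio arithmetic. It uses only the numbers in the quote; the names `HUMAN_WPM`, `model_wpm`, and `speed_ratio` are illustrative.

```python
HUMAN_WPM = 380  # human "thinking rate" used as the benchmark in the quote

# Generation speeds quoted above (words per minute, early April 2023).
model_wpm = {"gpt-3.5-turbo": 1200, "gpt-4": 370}


def speed_ratio(wpm, baseline=HUMAN_WPM):
    """Return how many times faster than the human baseline a model is."""
    return wpm / baseline


ratios = {name: speed_ratio(wpm) for name, wpm in model_wpm.items()}

# Speed a model would need in order to reach 5x the human baseline.
target_wpm = 5 * HUMAN_WPM

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x human speed")
print(f"5x target: {target_wpm} wpm")
```

On these figures gpt-3.5-turbo was already about three times the human benchmark in 2023 while gpt-4 was roughly at parity, so the 2030 forecast amounts to a bit more than a fivefold speedup over gpt-4.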

Benedict Evans thinks Unbundling AI is the next step. ChatGPT is too general, like a blank Excel page. And as with Excel, maybe a bunch of templates will try to guide users, but ultimately each template just wants to be a specialized company. We await a true paradigm shift: as the iPad was to pen computing, we want LLMs that do something truly unique that can’t be done other ways.

Bill Gates thinks GPT technology may have plateaued and that it’s unlikely GPT-5 will be as significant an advance as GPT-4 was.

MIT economist Daron Acemoglu argued that the field could be in for a “great AI disappointment”, suggesting that “Rose-tinted predictions for artificial intelligence’s grand achievements will be swept aside by underwhelming performance and dangerous results.”

Alex Pan, in My AI Timelines Have Sped Up (Again), bets AGI will happen by 2028 (10% chance), by 2035 (25%), by 2045 (50%) and by 2070 (90%):

Every day it gets harder to argue it’s impossible to brute force the step-functions between toy and product with just scale and the right dataset. I’ve been converted to the compute hype-train and think the fraction is like 80% compute 20% better ideas. Ideas are still important - things like chain-of-thought have been especially influential, and in that respect, leveraging LLMs better is still an ideas game.
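Because the probabilities in that timeline rise with the year, they read most naturally as cumulative ("by year X"). Under that assumption, a short sketch can turn them into the probability assigned to each interval. The function name and interval labels are illustrative.

```python
# Cumulative probabilities from the timeline above: (year, P(AGI by year)).
# ASSUMPTION: the figures are cumulative, which their ordering implies.
cumulative = [(2028, 0.10), (2035, 0.25), (2045, 0.50), (2070, 0.90)]


def interval_probabilities(cdf):
    """Convert cumulative 'by year' probabilities into per-interval ones."""
    out = []
    prev_year, prev_p = None, 0.0
    for year, p in cdf:
        label = f"by {year}" if prev_year is None else f"{prev_year + 1}-{year}"
        out.append((label, p - prev_p))
        prev_year, prev_p = year, p
    # Whatever mass is left covers "later than the last year, or never".
    out.append((f"after {prev_year}", 1.0 - prev_p))
    return out


for label, prob in interval_probabilities(cumulative):
    print(f"{label:>10}: {prob:.0%}")
```

Read this way, the largest single block of probability sits in 2046-2070, even though the median falls at 2045.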

Prediction Markets

LessWrong makes predictions

ChatGPT is pretty bad at making predictions, according to dynomight, who compared its predictions to those from the Manifold prediction market.

At a high level, this means that GPT-4 is over-confident. When it says something has only a 20% chance of happening, actually happens around 35-40% of the time. When it says something has an 80% chance of happening, it only happens around 60-75% of the time.
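A calibration check of this kind can be sketched in a few lines: group forecasts by stated probability, then compare each group's average forecast with how often the events actually happened. The data below is a hypothetical toy set built to mimic the pattern described in the quote. It is not dynomight's data, and the function is not their code.

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=5):
    """Group forecasts into equal-width probability bins.

    Returns {bin_index: (mean_forecast, observed_frequency, count)}.
    """
    bins = defaultdict(list)
    for p, happened in zip(forecasts, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, happened))

    table = {}
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_p = sum(p for p, _ in pairs) / len(pairs)
        freq = sum(1 for _, h in pairs if h) / len(pairs)
        table[idx] = (mean_p, freq, len(pairs))
    return table


# HYPOTHETICAL toy data: five 20% forecasts and five 80% forecasts.
forecasts = [0.2, 0.2, 0.2, 0.2, 0.2, 0.8, 0.8, 0.8, 0.8, 0.8]
outcomes = [1, 1, 0, 0, 0, 1, 1, 1, 0, 0]  # 1 = the event happened

table = calibration_table(forecasts, outcomes)
for idx, (mean_p, freq, count) in table.items():
    print(f"forecast ~{mean_p:.0%}: happened {freq:.0%} of the time (n={count})")
```

A well-calibrated forecaster would show the two percentages roughly equal in every bin. Over-confidence shows up as observed frequencies pulled toward 50% relative to the stated forecasts.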

The Manifold Prediction Market (Feb 2024) projects what GPT technology will look like in 2025 (via Zvi)

Zvi Mowshowitz, in GPT-4 Plugs In: “We continue to build everything related to AI in Python, almost as if we want to die, get our data stolen and generally not notice that the code is bugged and full of errors. Also there’s that other little issue that happened recently. Might want to proceed with caution…”

Culture

Culture critic Ted Gioia says ChatGPT is the slickest con artist of all time

And Black Mirror creator, Charlie Brooker, who at first was terrified, quickly became bored:

“Then as it carries on you go, ‘Oh this is boring. I was frightened a sec ago, now I’m bored because this is so derivative.’

“It’s just emulating something. It’s Hoovered up every description of every Black Mirror episode, presumably from Wikipedia and other things that people have written, and it’s just sort of vomiting that back at me. It’s pretending to be something it isn’t capable of being.”

“AI is here to stay and can be a very powerful tool,” Brooker told his audience.

“But I can’t quite see it replacing messy people,” he said of the AI chatbot and its limited capacity to generate imaginative storylines and ingenious plot twists.

The Mind at AI: Horseless Carriage to Clock is a 1989 AAAI paper describing an approach to AI intended to “establish new computation-based representational media, media in which human intellect can come to express itself with different clarity and force.”

AI Doesn’t Have to be Perfect

A16Z’s Martin Casado and Sarah Wang, in The Economic Case for Generative AI and Foundation Models, argue:

Many of the use cases for generative AI are not within domains that have a formal notion of correctness. In fact, the two most common use cases currently are creative generation of content (images, stories, etc.) and companionship (virtual friend, coworker, brainstorming partner, etc.). In these contexts, being correct simply means “appealing to or engaging the user.” Further, other popular use cases, like helping developers write software through code generation, tend to be iterative, wherein the user is effectively the human in the loop also providing the feedback to improve the answers generated. They can guide the model toward the answer they’re seeking, rather than requiring the company to shoulder a pool of humans to ensure immediate correctness.

Footnotes

1. Based on his paper The Simple Macroeconomics of AI.