Engineering

How will AI change the practice of engineering?

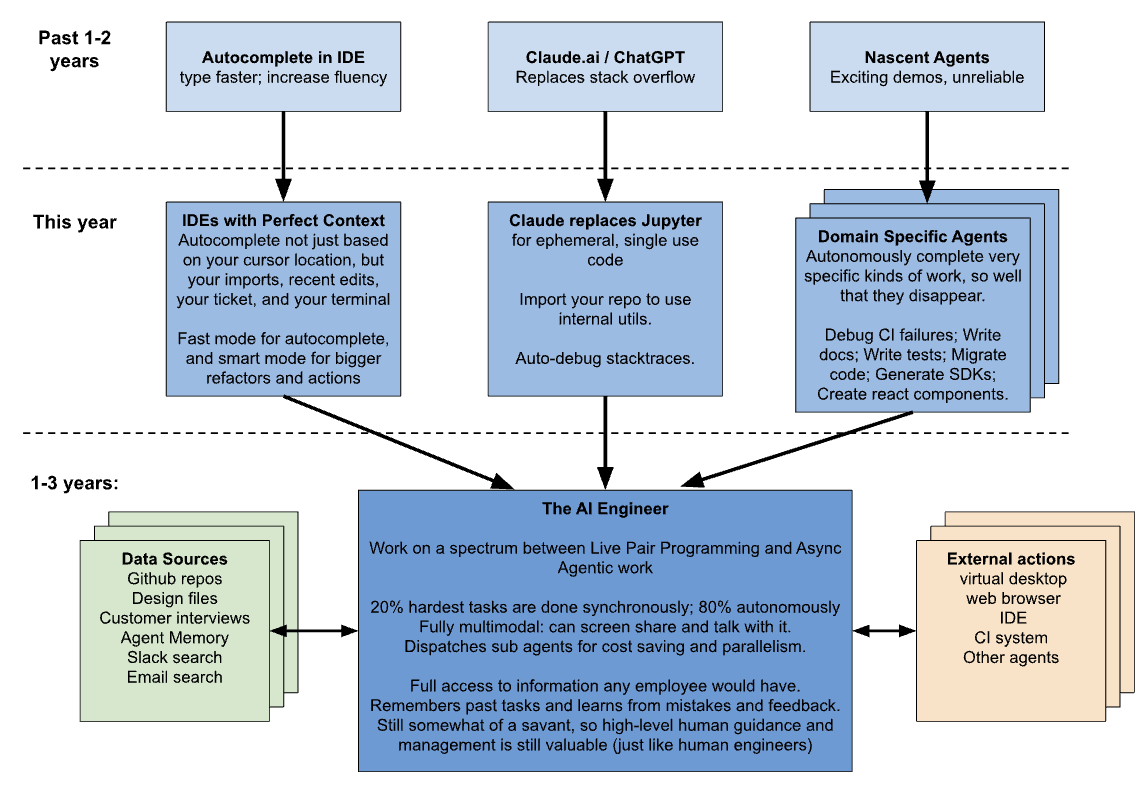

An Anthropic engineer speculates about the future of AI-based coding: working autonomously on well-scoped tasks and as a pair programmer on the hardest ones.

IEEE Spectrum (Jun 2024) reports on a study of ChatGPT's coding ability. The study was published in the June issue of IEEE Transactions on Software Engineering. Yutian Tang is a lecturer at the University of Glasgow.

ChatGPT did well on coding problems from before 2021 but performed much worse on problems published after that.

It was, however, often able to generate code with lower runtime and memory overhead than many human-written solutions.

Tang recommends giving ChatGPT more context and being more explicit in the prompt about watching for code vulnerabilities and similar issues.

A lengthy Hacker News thread on developers who feel disillusioned by AI

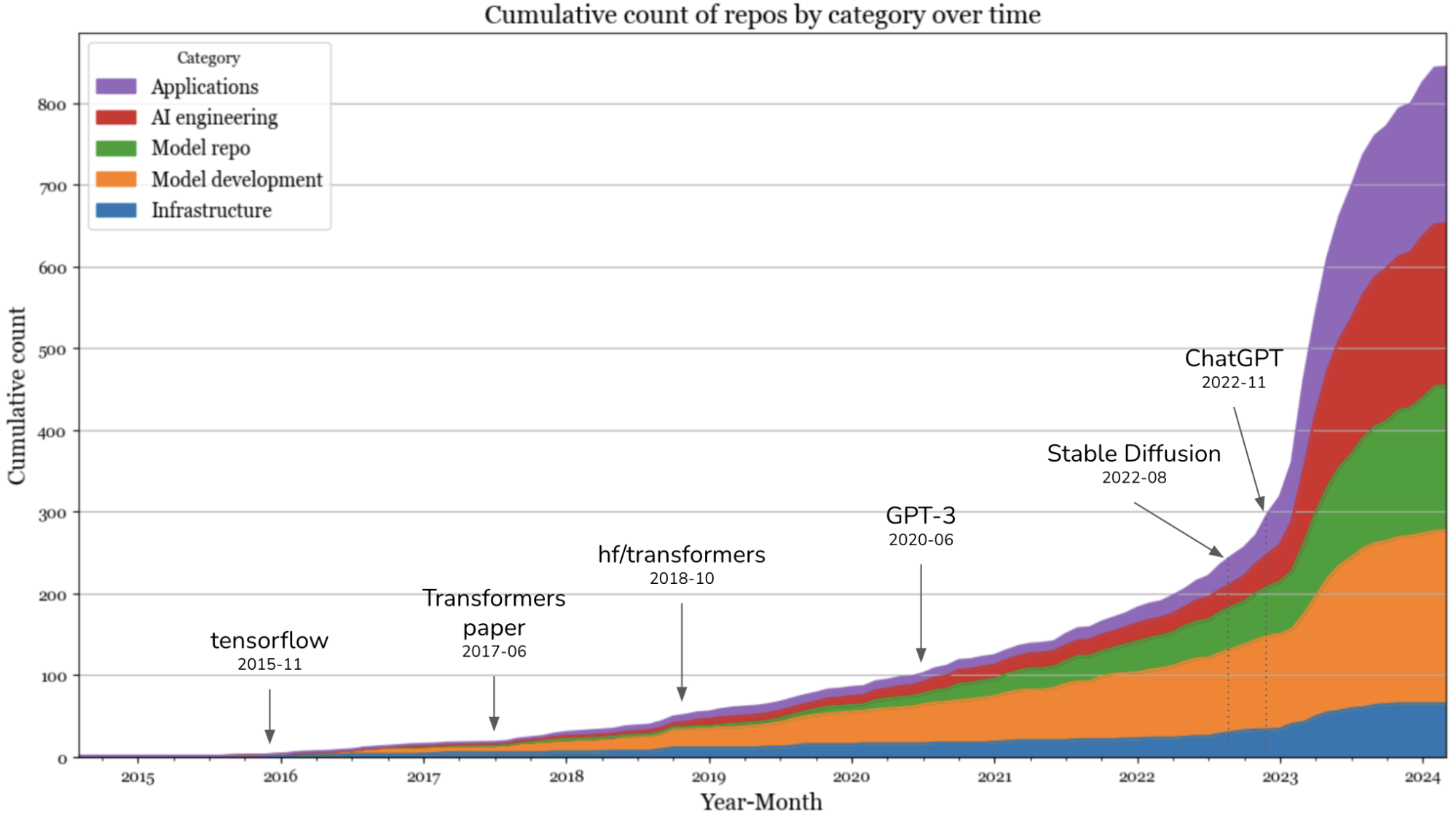

Chip Huyen: What I learned from looking at 900 most popular open source AI tools

Also see Developers and what they’re up to

Matt Welsh, in a lecture at Harvard, says:

The field of Computer Science is headed for a major upheaval with the rise of large AI models, such as ChatGPT, that are capable of performing general-purpose reasoning and problem solving. We are headed for a future in which it will no longer be necessary to write computer programs. Rather, I believe that most software will eventually be replaced by AI models that, given an appropriate description of a task, will directly execute that task, without requiring the creation or maintenance of conventional software. In effect, large language models act as a virtual machine that is “programmed” in natural language. This talk will explore the implications of this prediction, drawing on recent research into the cognitive and task execution capabilities of large language models.

Google co-founder Sergey Brin says:

AI could get quite good at programming, but you could say that about any field of human endeavor. So, I probably wouldn’t single out programming and say, “Don’t study that specifically.” I don’t know if that’s a good answer.

“The Model is the Computer”

Sam Altman: “ChatGPT is an eBike for the mind”

How much more can these models scale? Current models have seen only the tip of the iceberg of the data on the internet.

See Using Fixie.AI

Seattle-based GitClear examined four years of data covering 150M lines of code and concluded that the faster pace of AI-assisted code additions may contribute to “churn” and add technical debt, much as haphazard copy-pasting by many uncoordinated developers does.
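To make “churn” a little more concrete, here is a minimal Python sketch of one way such a metric could be computed. It assumes churn means the share of newly added lines that are changed or deleted within 14 days of being written; the `LineEvent` record and the 14-day window are illustrative assumptions, and GitClear's actual methodology may differ.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical record: when a line was added and, if ever, when it was next changed or deleted.
@dataclass
class LineEvent:
    added: date
    changed: Optional[date] = None

def churn_rate(lines: list[LineEvent], window_days: int = 14) -> float:
    """Fraction of added lines that were changed or deleted within `window_days` (assumed definition)."""
    if not lines:
        return 0.0
    window = timedelta(days=window_days)
    churned = sum(
        1 for line in lines
        if line.changed is not None and line.changed - line.added <= window
    )
    return churned / len(lines)

lines = [
    LineEvent(date(2024, 1, 1), date(2024, 1, 5)),   # rewritten after 4 days: counts as churn
    LineEvent(date(2024, 1, 1), date(2024, 3, 1)),   # changed after two months: not churn
    LineEvent(date(2024, 1, 2)),                     # never touched again: not churn
    LineEvent(date(2024, 1, 3), date(2024, 1, 10)),  # rewritten after 7 days: counts as churn
]
print(churn_rate(lines))  # 0.5
```

Under this definition, a rising churn rate means more freshly written code is being thrown away or reworked almost immediately, which is the pattern the GitClear report associates with AI-assisted additions.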

Codegen raised $16M to develop agent-based code writing.

Whereas Copilot, CodeWhisperer, and others focus on code autocompletion, Codegen targets “codebase-wide” issues like large migrations and refactoring (i.e., restructuring an app’s code without altering its functionality).

Matt Rickard: How AI Changes Workflows

Maybe issue tracking comes before code in future DevOps platforms? Does the code need to be checked in?

Product Management

Lenny Rachitsky says AI will impact product management arguably more than it does software engineering, because product management is mostly a people and communication skill. AI will mostly affect how the product vision and strategy are developed, as well as how requirements documents are written:

This is already happening with tools like ChatPRD. Describe what you want in human language, get an 80% complete draft, refine it, and then ship.

Tools will make it significantly easier to find the signal in the noise. However, customers will still want to talk to real people to share their honest challenges, ideas, and experiences. Skills like empathy, communication, and creativity will become increasingly important for connecting with customers.

Tools to explore: Dovetail, Sprig, Kraftful, Notably, Viable, Maze, and a bunch of examples from readers

Kyle Poyar interviews dozens of product managers for non-obvious advice.

UX Implications

Vishnu Menon gives his predictions in Language Model UXes in 2027:

- Chat consolidation

- Persistence across uses

- Universal access (multimodality)

- Commodified, local LLMs

- Dynamically Generated UI & Higher Level Prompting

- Proactive interactions

Arguments against AI Coding

AI systems will do whatever you tell them, but often that’s not what you want. A senior engineer or software architect is often most useful when recommending that you not do something, even though it’s technically possible. An extreme example is when doing the work would cause security or other problems. A more mundane problem is that the software becomes less maintainable over time.

David Eastman (Nov 2024) argues in Why LLMs Within Software Development May Be a Dead End that when you “outsource” your business to an LLM maker whose training dataset and processes you don’t understand, you are giving up control of your software. Until LLMs let you break down tasks into easily understandable chunks, developers who depend on LLMs will be at the mercy of those who develop the LLMs.

The article “Is it possible to program non-trivial applications and customize code without knowing much about programming?” walks through an attempt to get newbies to write a Flappy Bird-style game using ChatGPT, and concludes that it’s very difficult.

And this great comment from miraculixx:

This is because the use of (a programming) language has never been the real challenge of writing code. The real challenge is knowing what you want, and to be able to break it down in sufficient detail for the result to be useful, be that a game or some other applications. This process requires at least two traits: the ability to think in abstract terms and the ability to think logically. These two traits are very rare in people (and non existent in machines). For this reason alone there will always be some people who are better at producing code, and it does not matter what tool they use. At best ChatGPT & co. are tools that make these people more productive

See also Edsger Dijkstra’s essay On the foolishness of “natural language programming”

GitHub commissioned a study estimating that these tools could save developers up to 30% of their time, which could have an economic impact of $1.3 trillion in the United States alone.

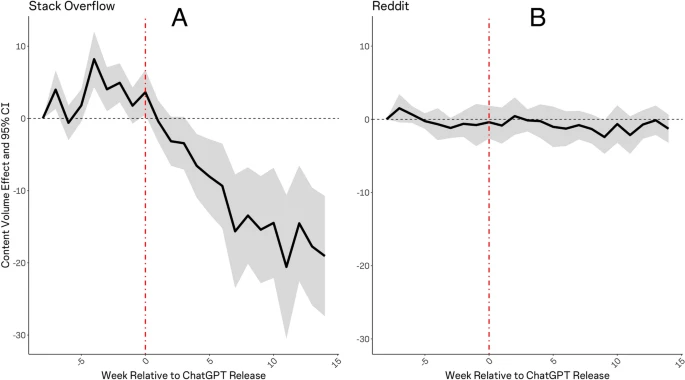

Meanwhile, Stack Overflow page views have plunged from 14M to 10M since Nov 2022.
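For scale, a fall from 14M to 10M page views is a relative decline of roughly 29%:

$$
\frac{14\text{M} - 10\text{M}}{14\text{M}} = \frac{4}{14} \approx 28.6\%
$$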

Another academic study calculates a 25% drop and warns about the implications for public knowledge sharing (and, ultimately, for LLM training data) (see HN):

R. Maria del Rio-Chanona, Nadzeya Laurentsyeva, Johannes Wachs, “Large language models reduce public knowledge sharing on online Q&A platforms,” PNAS Nexus, Volume 3, Issue 9, September 2024, pgae400, https://doi.org/10.1093/pnasnexus/pgae400

But note that ChatGPT usage has a disproportionate effect on sites like Stack Overflow that specialize in specific answers to questions. Reddit, which is more of a social community, sees little change in usage (Burtch, Lee, and Chen, 2024).

The Rise of the AI Engineer: a nice Substack writeup of the differences between AI engineering and machine learning.

Implications for Software Engineering

One comparison between people with and without access to ChatGPT showed a 50% efficiency advantage with the technology.

On the other hand, a Dec 2022 Stanford study concludes that people who use AI code assistants write less secure code.
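To make “less secure code” concrete, here is a minimal Python sketch of one classic vulnerability class, SQL injection, next to the standard fix. It is an illustration only, not an example taken from the Stanford study; the `users` table and the function names are hypothetical.

```python
import sqlite3

def setup() -> sqlite3.Connection:
    # In-memory database with a hypothetical users table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn

def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Vulnerable: user input is pasted directly into the SQL string.
    return conn.execute(
        f"SELECT name, secret FROM users WHERE name = '{name}'"
    ).fetchall()

def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Safer: a parameterized query treats the input as data, never as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()

conn = setup()
attack = "' OR '1'='1"
print(len(find_user_unsafe(conn, attack)))  # 2: every row leaks
print(len(find_user_safe(conn, attack)))    # 0: no user has that literal name
```

Both versions “work” for ordinary input, which is exactly why this kind of flaw slips past a developer who accepts a suggestion without reviewing it.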

IEEE Spectrum (Jun 2024), AI Copilots Are Changing How Coding Is Taught: “Professors are shifting away from syntax and emphasizing higher-level skills”

[!NOTE] Another vital expertise is problem decomposition. “This is a skill to know early on because you need to break a large problem into smaller pieces that an LLM can solve,” says Leo Porter, an associate teaching professor of computer science at the University of California, San Diego.

Daniel Zingaro, an associate professor of computer science at the University of Toronto Mississauga, says: “This is such a narrow view of what it means to be a software engineer, and I just felt that with generative AI, I’ve managed to overcome that restrictive view.”

Zingaro coauthored a book on AI-assisted Python programming with Porter.

Chat-Oriented Programming (CHOP)

Cody, from Sourcegraph, is an IDE extension that searches your codebase to provide context for your queries.

A web version lets you study any open source code base.

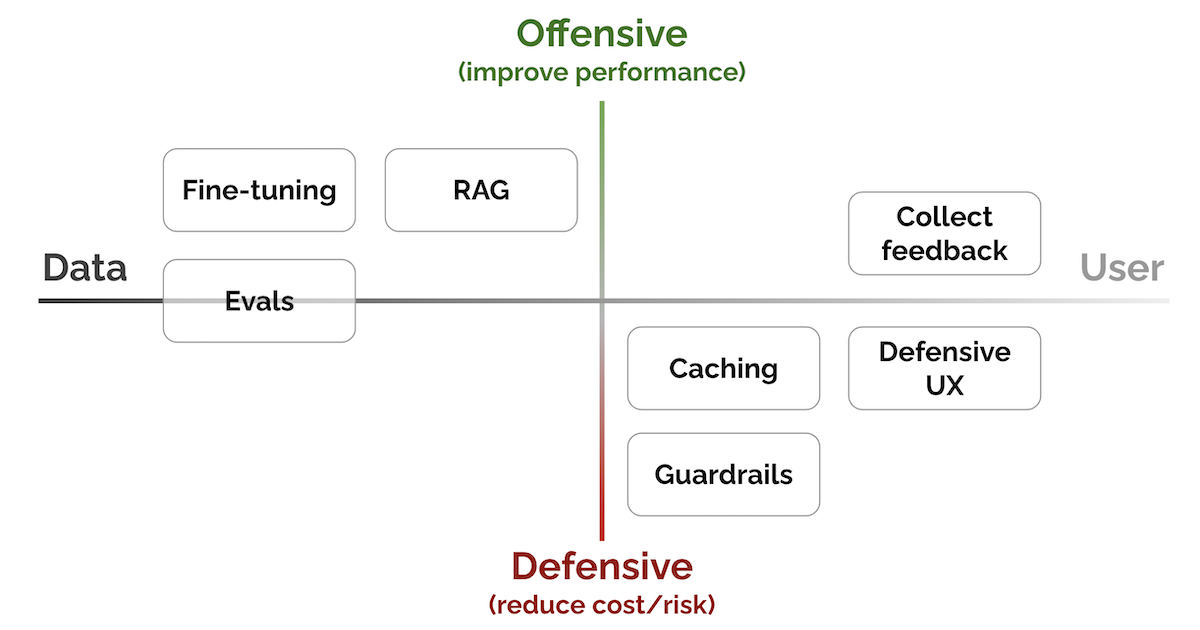

Patterns for LLM-based Products

Eugene Yan has a lengthy breakdown of Patterns for Building LLM-based Systems & Products

- Evals: To measure performance

- RAG: To add recent, external knowledge

- Fine-tuning: To get better at specific tasks

- Caching: To reduce latency & cost

- Guardrails: To ensure output quality

- Defensive UX: To anticipate & manage errors gracefully

- Collect user feedback: To build our data flywheel
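As an illustration of the caching pattern, here is a minimal Python sketch of an exact-match response cache wrapped around a model call. `fake_llm` is a hypothetical stand-in for a real model API, and normalizing prompts by lowercasing and collapsing whitespace is an assumption; some systems instead match semantically similar prompts.

```python
from typing import Callable

class CachedLLM:
    """Wraps a model call with an exact-match cache keyed on a normalized prompt."""

    def __init__(self, model: Callable[[str], str]):
        self.model = model
        self.cache: dict[str, str] = {}
        self.calls = 0  # how many times the underlying model was actually invoked

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share an entry.
        return " ".join(prompt.lower().split())

    def generate(self, prompt: str) -> str:
        key = self._key(prompt)
        if key not in self.cache:
            self.calls += 1
            self.cache[key] = self.model(prompt)
        return self.cache[key]

def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a slow, costly model API call.
    return f"answer to: {prompt.strip()}"

llm = CachedLLM(fake_llm)
llm.generate("What is RAG?")
llm.generate("  what is   rag? ")  # cache hit: same normalized key
print(llm.calls)  # 1
```

Because the cache returns whatever the first call produced, it trades freshness and sampling variety for lower latency and cost.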

Building LLM Applications

A comprehensive guide to building RAG-based LLM applications for production.

Base LLMs (ex. Llama-2-70b, gpt-4, etc.) are only aware of the information that they’ve been trained on and will fall short when we require them to know information beyond that. Retrieval augmented generation (RAG) based LLM applications address this exact issue and extend the utility of LLMs to our specific data sources.
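A minimal Python sketch of the RAG idea: retrieve the passages most relevant to a question and prepend them to the prompt sent to the model. Word-count cosine similarity stands in here for the embedding model and vector database a production system would use, and the sample documents and the `build_prompt` format are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> Counter:
    # Bag-of-words term counts (a crude stand-in for an embedding).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    # Rank documents by similarity to the question and keep the top k.
    q = tokenize(question)
    return sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]

def build_prompt(question: str, docs: list[str], k: int = 1) -> str:
    # Augment the question with retrieved context before sending it to an LLM.
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

docs = [
    "Our refund policy allows returns within 30 days of purchase.",
    "The office is closed on public holidays.",
    "Support tickets are answered within two business days.",
]
print(build_prompt("How many days do I have to request a refund?", docs))
```

The model never needs to have been trained on the refund policy; it only needs to read the retrieved passage, which is what lets RAG extend an LLM to your own data sources.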

Miessler’s Proposed Architecture

Information security professional Daniel Miessler (ME-slur) proposes: SPQA: The AI-based Architecture That’ll Replace Most Existing Software